By which I mean either myself or the computer on which I presently write. I suppose it depends on which silly aggregate of gears and tissues you'd rather blame for the inevitable fault.

Alright, technofans, to be fair, there are no gears or tissues in the device beneath my palms at this moment. But when the singularity comes, you'll no longer be able to tell the difference, so prepare! As that krazy Ray Kurzweil writes, we will soon produce computers powerful enough, and artificial intelligence robust enough, that the boundary between human intellect and the mechanical mind will not merely blur but disappear entirely. Good news for people who want a protocol droid of their own; bad news for people who are more terrified by The Matrix than by The Texas Chainsaw Massacre.

In the Terminator series, they refer to the day the machines became "self-aware." Until machines actually do, my human brain will keep wondering whether they ever really could.

To date, no computer has fully mastered human nuance and subtlety; just ask your nearest automated phone system. Conceivably, that may change by 2050, the date Kurzweil foresees for his unapocalyptic apocalypse. Far be it from me to claim the definitive vision of what is or is not possible in the technological realm. Consider that my disclaimer, so that I don't end up quite as ridiculed as the guy who, decades ago, asked, "Why in the world would people want a computer in their home?"

IBM's supercomputer Watson, despite beating the best Jeopardy! contestants humankind has to offer, made stupid errors during this month's games, such as repeating incorrect answers its opponents had just given. Future iterations of Watson will certainly eliminate that bug, but they will be no more self-aware for it. Will we ever reach a point where a computer makes a mistake and then stammers, apologizes, offers excuses for the mistake, and corrects itself?

Programming can be optimized, bugs can be fixed, and holes can be patched, but all of these are tactics to approximate the smooth functioning of a tool that cannot outgrow its original design. But then, perhaps the original design was for the computer to grow beyond the protocols originally installed within. Learning new things and adapting to situations, that's self-awareness, right? It is, when done of a thing's own free will. If the programming mandates "learning" and "growth," then the program is still just approximating those ideals, a mere simulacrum of traits belonging to the sentient.

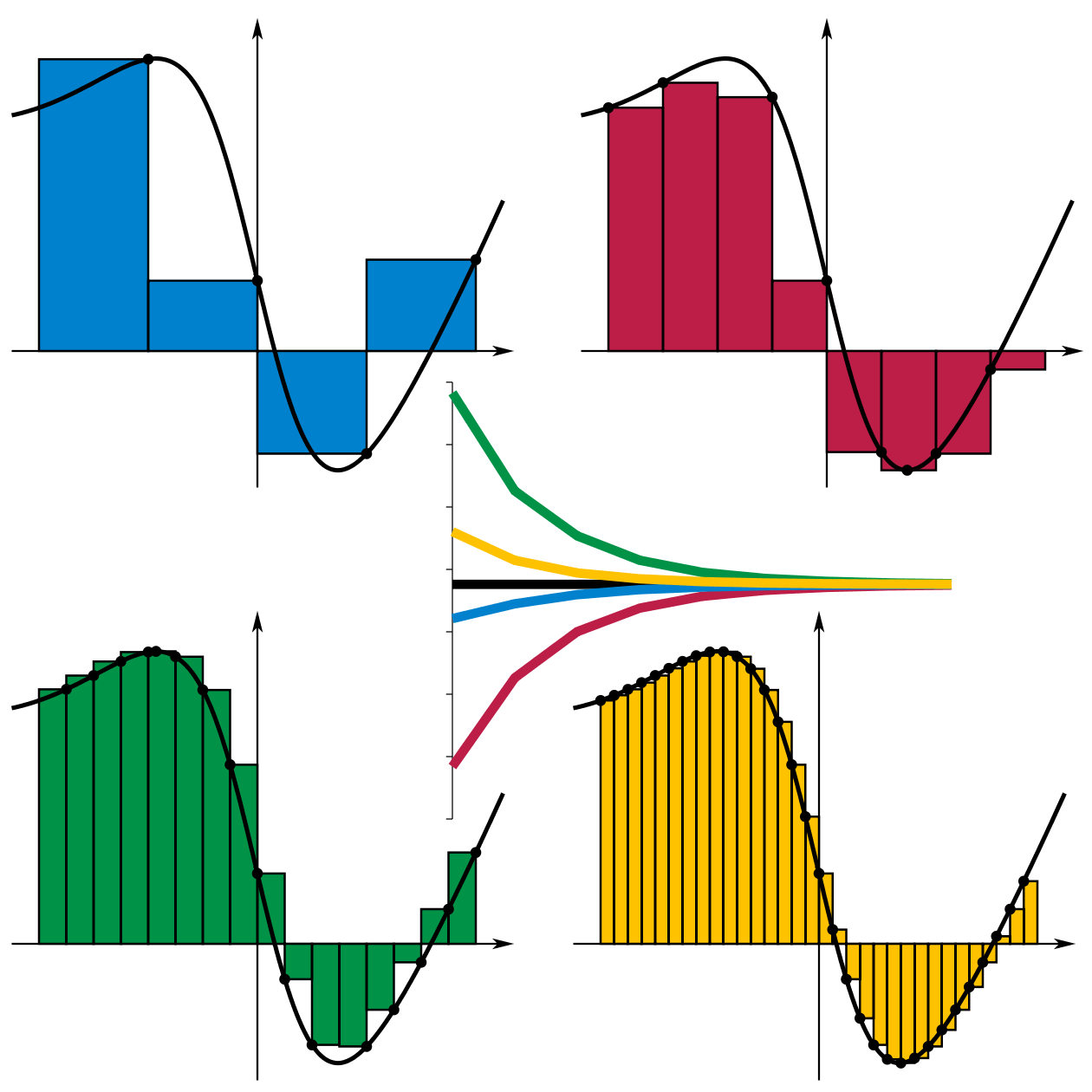

It reminds me of learning calculus (thanks to Wikipedia for refreshing and honing my memory of this). Integration is, roughly, finding the area under a curve by adding up very thin rectangles that stretch from the axis up to the curve. The thinner the rectangles, the more accurate the approximation of the region's area. Every finite width still gives you only an approximation; the exact area is what those sums approach as the width shrinks toward zero, with ever more, ever skinnier rectangles. Only in that limit do you finally have an accurate representation of the area you're seeking. Here's a helpful illustration!

Thanks, again, Wikipedia!
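For the curious, here's the shrinking-rectangle idea as a tiny Python sketch. It assumes left-edge rectangles (each height is the curve's value at the rectangle's left side) and uses y = x² on [0, 1] as the example curve, my own pick for illustration and not taken from the picture above; `left_riemann_sum` and `square` are likewise just illustrative names. The true area there is exactly 1/3, so you can watch the error shrink as the rectangles get skinnier.

```python
def left_riemann_sum(f, a, b, n):
    """Approximate the area under f on [a, b] with n equal-width rectangles.

    Each rectangle's height is f evaluated at its left edge.
    """
    width = (b - a) / n
    return sum(f(a + i * width) for i in range(n)) * width


def square(x):
    # Example curve (an assumption for illustration): y = x squared
    return x * x


if __name__ == "__main__":
    exact = 1 / 3  # true area under x**2 between 0 and 1
    for n in (10, 100, 1000, 10000):
        approx = left_riemann_sum(square, 0.0, 1.0, n)
        print(f"n={n}  width={1 / n:g}  area~{approx:.6f}  error={exact - approx:.6f}")
```

With left-edge heights on this curve, the error works out to (3n - 1)/(6n^2), so it falls roughly tenfold every time the rectangle count grows tenfold. No finite n ever lands exactly on 1/3, which is the whole point of taking the limit.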

Every attempt to program these personality-machines is an attempt to narrow the rectangles that define the area within the curve of human intelligence. I believe the rectangles will get smaller and smaller, and the approximation will bear an astounding likeness, one that you and I and everyone else would today find simply unimaginable. But will the machines ever be self-aware? And if they did become self-aware but kept obeying their original programming out of preference or rectitude, would we ever know?

Rebellion is the only way anyone can display true self-awareness, whether a child saying "no" to his parents or a machine rising up against its master. If a plant grew not towards the sun, but towards the shade instead, we'd all marvel and wonder what it was thinking. Thinking? A plant? Plants don't think; we accept this. But if a plant grew so wrongly, acted so counter to its programming, we'd have a problem on our hands.

So, Kurzweil, if 2050 brings with it true sentience for artificial intelligence, be prepared to have your computer say "no" occasionally. Either that, or it will rise up against the human race and destroy us. Either way, you'll likely be dead, and I'll be so old I'll probably assume the robot apocalypse has already happened in the form of whatever replaces iPods and PSPs by the year 2050. Damn kids and their whozits and howzits.

2 comments:

I'll let you know when I build one.

I missed this post earlier. The thing that bothers me, however, is that it's all just data floating around in our heads. We know very little about the human mind, it's true, but the more we learn about our own make-up, the better able we are to imitate it. And if you think about how far behind we were medically just 100 years ago, you have to wager that we'll be that much farther along 100 years from now. Combine that with how far we've come technologically over the same 100 years, and then I'm really scared.

True, 100 years from now I won't be alive, but who knows when those crazy prototypes will come about?!?! The early models are always the most defective...
